White Paper

LLM Fine-Tuning

Using parameter-efficient fine-tuning (PEFT)

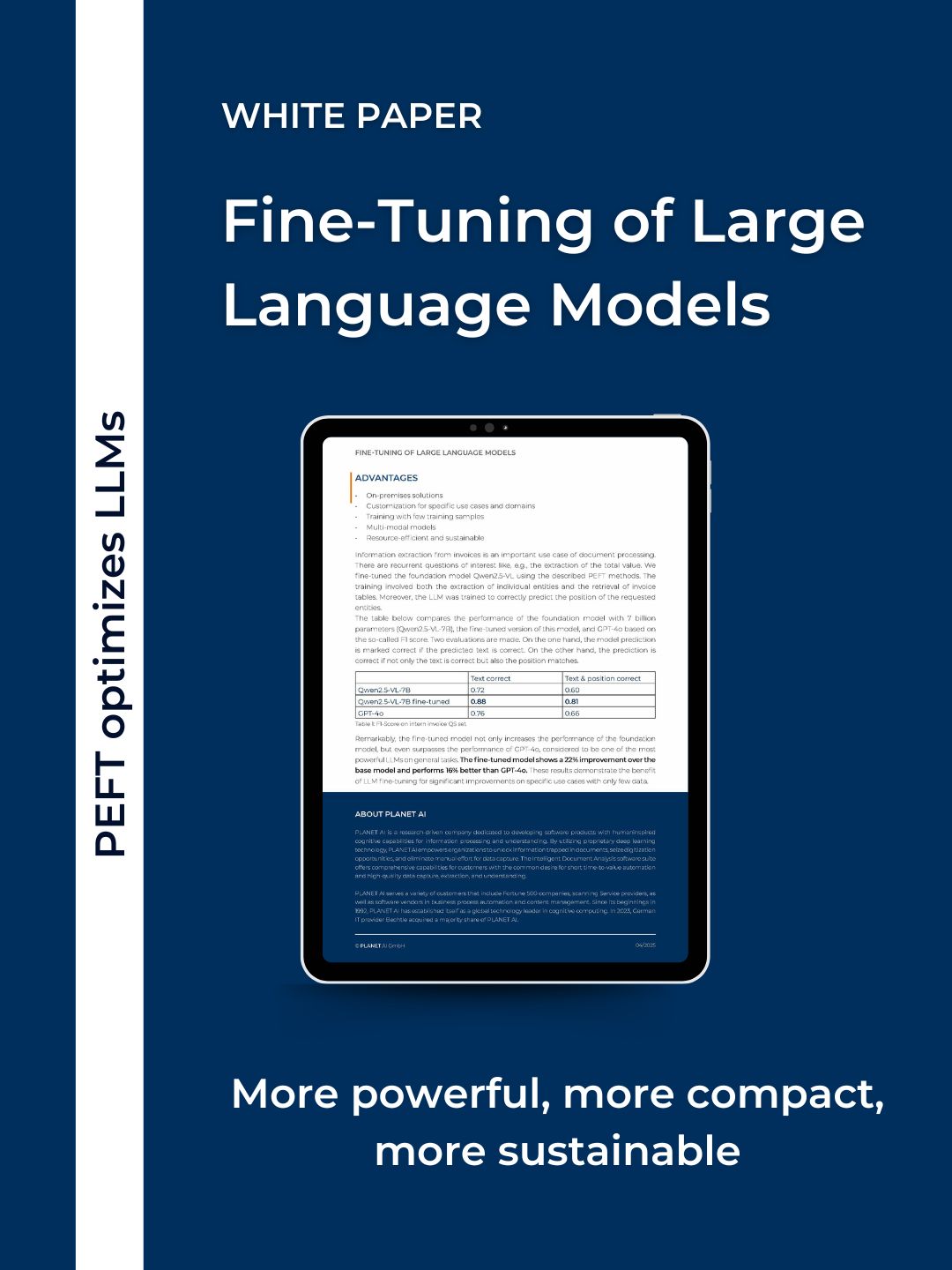

Large language models (LLMs), such as those behind ChatGPT, have broad capabilities but often fall short on specialized tasks. By applying parameter-efficient fine-tuning (PEFT), we significantly improve LLM performance for specific use cases such as invoice data extraction, using only a small amount of training data and compute. Our approach enables custom, on-premises-ready AI solutions that are sustainable, cost-efficient, and multimodal (text and image). Because adapter-based tuning trains only small adapter modules while the base model's weights stay frozen, the environmental footprint remains low and deployment stays flexible.
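To give a rough sense of why adapter-based tuning is parameter-efficient, the sketch below compares the number of trainable weights for full fine-tuning of a single dense layer against a low-rank adapter on the same layer. It assumes a LoRA-style adapter, which is one common PEFT method and not necessarily the one used in this paper; the layer size (4096 x 4096) and the rank (8) are hypothetical values chosen only for illustration.

```python
def full_params(d_in: int, d_out: int) -> int:
    """Weights updated when a dense d_in x d_out layer is fully fine-tuned."""
    return d_in * d_out


def lora_params(d_in: int, d_out: int, rank: int) -> int:
    """Weights trained by a low-rank adapter on the same layer.

    Assumes a LoRA-style update W + B @ A, where A has shape (rank, d_in)
    and B has shape (d_out, rank); the base weight W stays frozen.
    """
    return rank * (d_in + d_out)


if __name__ == "__main__":
    d, r = 4096, 8  # hypothetical layer width and adapter rank
    full = full_params(d, d)
    adapter = lora_params(d, d, r)
    print(f"full: {full:,}  adapter: {adapter:,}  share: {adapter / full:.2%}")
    # prints: full: 16,777,216  adapter: 65,536  share: 0.39%
```

Under these assumed numbers, the adapter trains well under one percent of the layer's weights, which is the kind of reduction that makes fine-tuning on modest hardware plausible.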

Multimodal LLM-based engines: Qwen2.5-VL-7B, Qwen2.5-VL-7B fine-tuned, GPT-4o